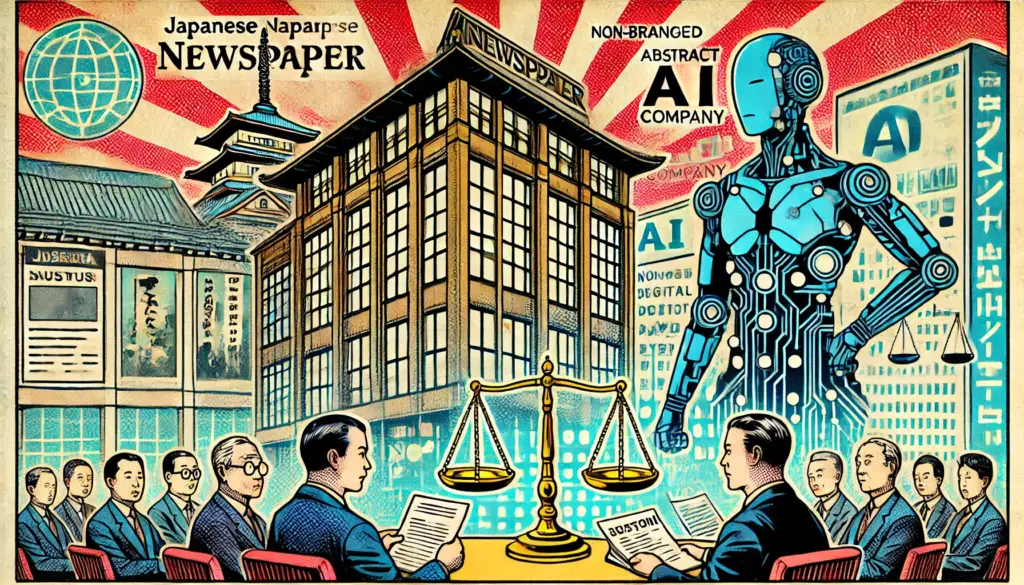

In an unprecedented move, one of Japan’s largest newspaper publishers has taken legal action against a rising AI startup from the U.S. The case marks a turning point not just in Japanese media history, but also in the global debate surrounding AI and intellectual property.

On August 7, three branches of a major Japanese newspaper group jointly filed a lawsuit in Tokyo District Court, demanding the suspension of an AI-powered search service developed by a U.S. startup and seeking over ¥2.1 billion (approx. $13 million) in damages. Their claim? That the AI service had used their news articles without permission.

This is the first lawsuit in Japan where a mainstream media outlet has legally challenged a generative AI company over unauthorized use of copyrighted material.

🧠 What Is Perplexity and Why Is It in Trouble?

Perplexity is an emerging AI-powered search engine that delivers conversational, summarized answers to user queries by scanning content across the web. It offers an alternative to traditional search by synthesizing information into a direct answer rather than simply returning a list of links to original sources.

However, this innovation has sparked serious concerns among content creators—particularly those in journalism. News outlets have begun noticing that their original articles appear to be replicated or summarized in Perplexity’s responses, without any formal agreement or payment.

Japanese publishers argue that this practice constitutes copyright infringement, particularly when AI tools monetize such summaries or use them to train their models. The latest lawsuit adds fuel to this growing international fire.

🔥 A Global Trend Reaches Japan

Globally, legal action against AI platforms has been intensifying. While similar lawsuits have already been filed in the U.S. and Europe, Japan had remained relatively quiet until now.

By filing this lawsuit, the Japanese media company joins a wave of traditional publishers seeking to protect their content from what they describe as “unauthorized scraping” and “free-riding” by AI technologies. This legal action signals a shift in Japan’s position: from cautious observer to active participant in the debate over AI ethics and legality.

📰 Why It Matters for Journalism

Journalism thrives on credibility, labor, and accuracy. When AI platforms use journalistic content to train or generate responses without permission, they risk devaluing original reporting.

This lawsuit shines a light on several deeper issues:

- Loss of revenue for news organizations when users rely on AI summaries instead of visiting the original article.

- Lack of accountability when AI-generated answers are incorrect or misleading.

- Disruption of trust, as users may assume the AI responses are endorsed by the original news source.

The rise of AI tools that mimic journalism without the same ethical standards could pose a threat to the entire ecosystem of reliable information.

⚖️ Legal Grey Zones: Is AI Just “Reading the Web”?

One of the key debates centers on “fair use” (strictly a U.S. doctrine; Japanese copyright law relies on specific statutory exceptions instead) and whether AI companies can legally ingest and summarize content that is publicly accessible.

Some AI firms argue that because news content is available online, it is fair game for training models or generating answers. Publishers counter that public availability does not equate to a right to reuse, especially when the reuse involves commercial gain or large-scale replication.

Japanese copyright law, while traditionally strict, had not been tested in the context of generative AI until now. The outcome of this case may set new legal precedents within Japan—and possibly influence global norms.

💬 Independent Commentary: A Necessary Confrontation?

From an ethical and practical standpoint, this confrontation may be inevitable. As generative AI matures, it must come to terms with industries built on intellectual effort, especially journalism.

Some key perspectives worth noting:

- Innovation needs collaboration, not exploitation: AI developers must begin forging real partnerships with content creators. The model of “scrape now, settle later” is unsustainable.

- Transparency builds trust: AI platforms should clearly disclose sources and allow media companies to opt out of having their content used for training or displayed in answers.

- Creators deserve compensation: If AI services are profiting from journalism, then journalists and publishers should have a seat at the table.

Ultimately, this case isn’t just about one newspaper or one AI startup. It represents a cultural and technological crossroads where information, value, and ethics collide.

🔮 What Comes Next?

Depending on the court’s decision, several outcomes are possible:

- The AI company may be forced to halt its use of Japanese media content or negotiate a licensing deal.

- Other Japanese publishers may follow suit, leading to a cascade of similar lawsuits.

- AI platforms may revise their technology, opting to link rather than summarize—or shift toward paying content providers for access.

- Japan’s lawmakers and courts may establish clearer AI copyright guidelines, setting a regional standard in Asia.

One thing is certain: the conversation has begun, and it will not end here.

🧩 Final Thoughts: A Call for Balance

Technological disruption has always challenged established industries. The difference with AI is its speed—and its scale. If left unchecked, it may undermine the very sources it depends on.

Rather than resist innovation, the media industry can embrace AI—but on terms that protect creative value. Likewise, AI developers must understand that transparency, fairness, and accountability are not optional—they’re essential.

This lawsuit may be the first of its kind in Japan, but it won’t be the last. The path forward depends on how both sides choose to respond: with confrontation, or collaboration.